As artificial intelligence (AI) becomes increasingly integrated into daily life, the ethics of how it is built and used grows in importance. As organizations use AI to enhance decision-making, streamline operations, and improve customer experiences, the ethical implications of these technologies must be at the forefront of discussions. Ethical AI is not merely a buzzword; it represents a commitment to ensuring that AI systems are designed and implemented in ways that respect human rights, promote fairness, and enhance societal well-being.

As leaders in business and technology, it is imperative to recognize that the choices we make today regarding AI will shape the future landscape of our societies.

As AI systems become more autonomous and influential, the potential for unintended consequences increases.

Therefore, it is essential for organizations to adopt a proactive approach to ethical AI, embedding ethical principles into the design, development, and deployment of AI technologies. This commitment not only safeguards against potential harm but also positions organizations as responsible stewards of technology, enhancing their reputation and fostering long-term success.

Key Takeaways

- Ethical AI is crucial to prevent harm and promote fairness in technology use.

- Addressing bias and ensuring transparency are key to building trust in AI systems.

- Regulation plays a vital role in guiding responsible AI development and deployment.

- Ethical considerations must be integrated into AI applications across sectors like healthcare and law enforcement.

- The future of ethical AI involves overcoming challenges while leveraging opportunities for societal benefit.

Understanding the Risks of Unethical AI

The risks associated with unethical AI are multifaceted and can have far-reaching consequences. One of the most pressing concerns is the potential for bias in AI algorithms, which can lead to discriminatory outcomes. When AI systems are trained on historical data that reflects societal biases, they can perpetuate and even exacerbate these biases in decision-making processes. For instance, biased algorithms in hiring practices can result in qualified candidates being overlooked based on race or gender, ultimately undermining diversity and inclusion efforts within organizations.

Moreover, the lack of transparency in AI systems poses significant risks. When organizations deploy AI without clear understanding or oversight, they may inadvertently create systems that operate in ways that are opaque to users and stakeholders. This opacity can lead to a loss of trust among consumers and employees alike, as individuals may feel powerless to challenge decisions made by algorithms they do not understand. The consequences of such mistrust can be detrimental, leading to reputational damage and decreased user engagement.

The Role of Regulation in Ethical AI

Regulation plays a crucial role in promoting ethical AI practices across industries. Effective regulation can help mitigate risks associated with unethical AI by setting standards for transparency, accountability, and fairness. By establishing clear guidelines for organizations to follow, regulators can foster an environment where ethical considerations are prioritized throughout the AI lifecycle.

However, regulation alone is not sufficient. It must be complemented by a culture of ethical awareness within organizations. Leaders must champion ethical practices and encourage their teams to prioritize ethical considerations in their work. This cultural shift requires ongoing education and training on the ethical implications of AI technologies, empowering employees to make informed decisions that align with organizational values. By fostering a culture of ethical awareness alongside regulatory compliance, organizations can create a robust framework for responsible AI development.

Ethical Considerations in AI Development

| Ethical Consideration | Description | Key Metrics | Measurement Methods |
|---|---|---|---|
| Bias and Fairness | Ensuring AI systems do not discriminate against any group. | Disparate Impact Ratio, False Positive/Negative Rates by Group | Statistical parity tests, fairness audits, bias detection tools |
| Transparency | Ability to explain AI decision-making processes. | Explainability Score, Model Interpretability Index | Use of explainable AI techniques, user surveys on understanding |
| Privacy | Protecting user data and ensuring confidentiality. | Data Anonymization Level, Number of Data Breaches | Privacy impact assessments, encryption audits |
| Accountability | Clear assignment of responsibility for AI outcomes. | Incident Response Time, Number of Accountability Reports | Governance frameworks, audit trails, compliance checks |
| Safety and Security | Preventing harm caused by AI systems. | Number of Safety Incidents, Security Vulnerability Scores | Penetration testing, safety validation protocols |
| Human Control | Maintaining human oversight over AI decisions. | Percentage of Decisions Reviewed by Humans | Process audits, human-in-the-loop system metrics |
| Environmental Impact | Minimizing energy consumption and carbon footprint. | Energy Usage (kWh), Carbon Emissions (kg CO2e) | Energy monitoring tools, lifecycle assessments |
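
To make one of the metrics in the table concrete, here is a minimal Python sketch of the Disparate Impact Ratio, taken here to mean the lowest group selection rate divided by the highest. The function names, group labels, and toy data are hypothetical and chosen only for illustration; they do not come from any particular fairness library.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Return the share of favorable decisions (coded as 1) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        favorable[group] += decision
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(groups, decisions):
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no favorable decisions in any group")
    return min(rates.values()) / highest


# Hypothetical toy data: 1 = favorable outcome (for example, shortlisted).
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
ratio = disparate_impact_ratio(groups, decisions)
print(f"Disparate impact ratio: {ratio:.2f}")
```

A commonly cited rule of thumb (the "four-fifths rule") treats a ratio below 0.8 as a signal worth investigating. It is a screening heuristic, not proof of discrimination, and the right threshold depends on context.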

The development of AI technologies necessitates a comprehensive understanding of ethical considerations at every stage of the process. From data collection to algorithm design and deployment, ethical principles should guide decision-making. For instance, organizations must ensure that data used to train AI models is collected ethically and represents diverse perspectives. This involves obtaining informed consent from individuals whose data is being used and actively seeking out underrepresented groups to avoid perpetuating existing biases.

Furthermore, organizations should prioritize explainability in their AI systems. Users must be able to understand how decisions are made by algorithms, particularly in high-stakes scenarios such as healthcare or finance. By designing AI systems that provide clear explanations for their outputs, organizations can enhance user trust and facilitate accountability. Ethical considerations should also extend to the potential societal impacts of AI technologies, prompting organizations to assess how their innovations may affect various stakeholders and communities.

Addressing Bias and Fairness in AI Algorithms

Addressing bias and ensuring fairness in AI algorithms is a critical challenge that organizations must confront head-on. Bias can take various forms, whether through skewed training data or flawed algorithmic design, and can lead to significant disparities in outcomes for different demographic groups. To combat this issue, organizations should implement rigorous testing protocols that evaluate algorithms for bias before deployment. This includes conducting audits that assess how algorithms perform across diverse populations and making necessary adjustments to mitigate any identified biases.

Moreover, fostering collaboration between interdisciplinary teams can enhance efforts to address bias in AI systems. By bringing together experts from fields such as ethics, sociology, and data science, organizations can gain valuable insights into the complexities of bias and fairness. This collaborative approach not only enriches the development process but also ensures that diverse perspectives are considered when designing algorithms. Ultimately, addressing bias requires a commitment to continuous improvement and a willingness to adapt as societal norms evolve.
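
As one illustration of what a pre-deployment audit across populations might compute, the sketch below compares false positive and false negative rates per group. It assumes binary labels and predictions (1 = positive); the function name and the toy data are hypothetical, and a real audit would use far larger samples and report uncertainty.

```python
def error_rates_by_group(groups, y_true, y_pred):
    """Compute the false positive rate and false negative rate for each group.

    A rate is None when the group has no actual negatives (for FPR)
    or no actual positives (for FNR), since it is undefined in that case.
    """
    counts = {}
    for group, actual, predicted in zip(groups, y_true, y_pred):
        c = counts.setdefault(group, {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
        if actual == 1:
            c["pos"] += 1
            if predicted == 0:
                c["fn"] += 1
        else:
            c["neg"] += 1
            if predicted == 1:
                c["fp"] += 1
    return {
        group: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            "fnr": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for group, c in counts.items()
    }


# Hypothetical toy audit data for two groups.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
rates = error_rates_by_group(groups, y_true, y_pred)
for group, r in rates.items():
    print(group, r)
```

Large gaps between groups on either rate are a prompt for closer review of the training data and the model, not a verdict on their own.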

Ensuring Transparency and Accountability in AI Systems

Transparency and accountability are foundational principles for building trust in AI systems. Organizations must strive to create environments where stakeholders can understand how AI technologies operate and how decisions are made. This involves providing clear documentation of algorithms, including their design choices, data sources, and performance metrics. By making this information accessible, organizations empower users to engage critically with AI systems and hold them accountable for their outputs.

Additionally, establishing mechanisms for accountability is essential for addressing potential harms caused by AI technologies. Organizations should implement processes for monitoring the performance of AI systems post-deployment, allowing them to identify and rectify issues as they arise. This proactive approach not only enhances user trust but also demonstrates a commitment to ethical practices. By fostering transparency and accountability, organizations can create a culture where ethical considerations are prioritized throughout the lifecycle of AI technologies.
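
Post-deployment monitoring can start with something as simple as a decision log. The sketch below is one hypothetical shape for such a log, with a check on the share of decisions reviewed by a human. The `DecisionRecord` fields, the function names, and the 20% default threshold are all assumptions made for illustration, not a standard.

```python
from dataclasses import dataclass


@dataclass
class DecisionRecord:
    """One logged AI decision (hypothetical schema for illustration)."""
    decision_id: str
    model_version: str
    outcome: str
    reviewed_by_human: bool


def human_review_rate(records):
    """Fraction of logged decisions that a human reviewed (0.0 if the log is empty)."""
    if not records:
        return 0.0
    return sum(r.reviewed_by_human for r in records) / len(records)


def needs_attention(records, min_review_rate=0.2):
    """Flag the log when human oversight falls below the assumed minimum rate."""
    return human_review_rate(records) < min_review_rate


# Hypothetical log entries.
records = [
    DecisionRecord("d1", "v1.0", "approved", True),
    DecisionRecord("d2", "v1.0", "denied", False),
    DecisionRecord("d3", "v1.0", "approved", False),
    DecisionRecord("d4", "v1.0", "denied", False),
]
print(f"Human review rate: {human_review_rate(records):.0%}")
print("Needs attention:", needs_attention(records))
```

Recording the model version alongside each outcome is a deliberate choice here: it lets an audit trace a problematic decision back to the system that produced it.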

The Impact of Unethical AI on Society

The societal impact of unethical AI practices can be profound and far-reaching. When organizations prioritize profit over ethics, they risk perpetuating systemic inequalities and exacerbating social injustices. For instance, biased algorithms used in hiring or lending decisions can reinforce existing disparities in employment opportunities or access to financial resources. The consequences extend beyond individual lives; they can undermine social cohesion and erode public trust in institutions.

Moreover, the proliferation of unethical AI practices can lead to a backlash against technology as a whole. As individuals become increasingly aware of the potential harms associated with biased or opaque algorithms, they may develop skepticism toward AI innovations. This skepticism can hinder technological progress and stifle innovation, ultimately limiting the benefits that responsible AI could bring to society. To mitigate these risks, organizations must prioritize ethical considerations in their AI strategies and actively work to promote positive societal outcomes.

Building Trust in AI through Ethical Practices

Building trust in AI requires a concerted effort from organizations to demonstrate their commitment to ethical practices. Transparency is key; organizations must communicate openly about their AI initiatives, including how they address issues such as bias and accountability. Engaging with stakeholders—whether customers, employees, or community members—can foster dialogue around ethical concerns and help organizations better understand the expectations of those they serve.

Additionally, organizations should prioritize education and awareness around ethical AI practices both internally and externally. By providing training for employees on ethical considerations in AI development and deployment, organizations empower their teams to make informed decisions that align with organizational values. Externally, engaging with customers through educational initiatives can demystify AI technologies and foster greater understanding of their benefits and limitations.

Ultimately, building trust requires ongoing commitment to ethical practices that prioritize the well-being of individuals and communities.

Ethical AI in Healthcare and Medicine

The application of AI in healthcare presents unique ethical challenges that demand careful consideration. While AI has the potential to revolutionize patient care through improved diagnostics and personalized treatment plans, it also raises concerns about privacy, consent, and equity. For instance, the use of patient data for training algorithms must be conducted with utmost care to ensure that individuals’ privacy is respected and that informed consent is obtained.

Moreover, addressing bias in healthcare algorithms is critical for ensuring equitable access to care. If algorithms are trained on data that reflects historical disparities in healthcare access or outcomes, they may inadvertently perpetuate these inequalities in clinical decision-making. Organizations must prioritize fairness by actively seeking diverse datasets that represent various demographic groups and by implementing rigorous testing protocols to evaluate algorithmic performance across populations.

The Ethical Implications of AI in Law Enforcement and Criminal Justice

The use of AI in law enforcement raises significant ethical implications that warrant careful scrutiny. Predictive policing algorithms, for example, have been criticized for perpetuating racial biases present in historical crime data. When law enforcement agencies rely on biased algorithms for resource allocation or decision-making, they risk exacerbating existing inequalities within the criminal justice system.

To address these concerns, it is essential for law enforcement agencies to adopt transparent practices when implementing AI technologies. This includes providing clear documentation on how algorithms are developed and evaluated as well as engaging with community stakeholders to understand their concerns regarding algorithmic bias. By prioritizing ethical considerations in the deployment of AI within law enforcement, agencies can work toward building trust with communities while ensuring fair treatment for all individuals.

The Future of Ethical AI: Challenges and Opportunities

As we look toward the future of ethical AI, several challenges and opportunities emerge on the horizon. One significant challenge lies in balancing innovation with ethical considerations; as organizations strive to remain competitive in an increasingly digital landscape, there may be pressure to prioritize speed over ethics. However, this approach can lead to detrimental consequences that undermine public trust in technology.

Conversely, there are immense opportunities for organizations that embrace ethical practices in their AI strategies. By prioritizing transparency, accountability, and fairness, organizations can differentiate themselves in the marketplace while fostering positive societal outcomes. Moreover, as consumers become more discerning about the ethical implications of technology, organizations that demonstrate a commitment to responsible practices will likely gain a competitive advantage.

In conclusion, the journey toward ethical AI is ongoing and requires collaboration among stakeholders across industries. By prioritizing ethical considerations at every stage of the AI lifecycle—from development to deployment—organizations can harness the transformative potential of technology while safeguarding human rights and promoting societal well-being. The future of ethical AI is not just a possibility; it is an imperative for leaders who seek to navigate the complexities of an increasingly interconnected world.

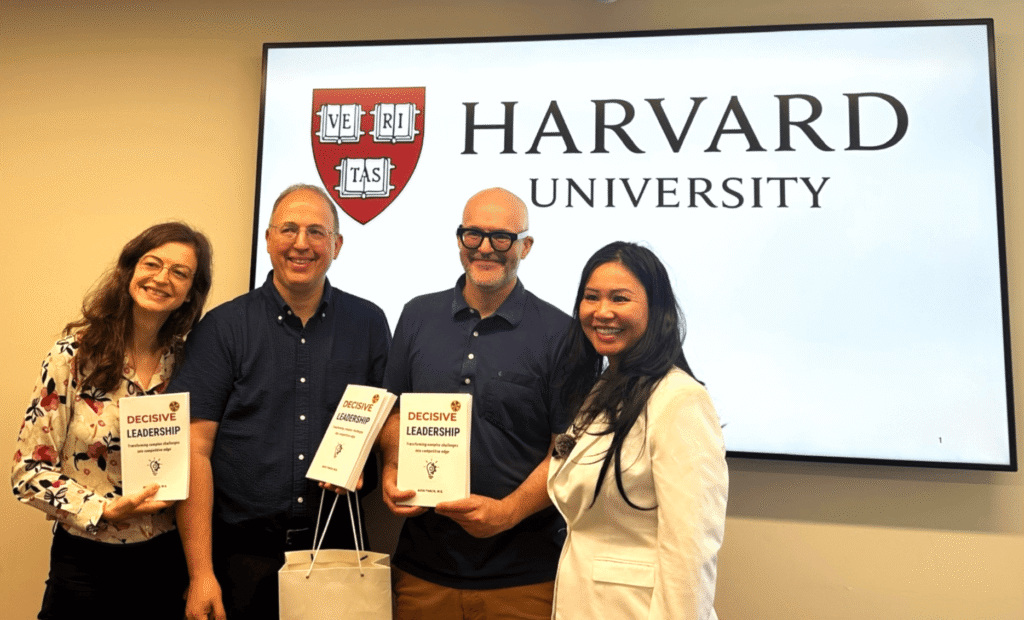

In the ongoing discussion about Ethical AI, it is essential to consider how artificial intelligence is transforming various sectors, including leadership development. A related article that delves into this topic is titled “Executive AI Coaching: How Artificial Intelligence is Shaping Leadership Development,” which explores the implications of AI in enhancing leadership skills and decision-making processes. You can read more about it [here](https://iavva.ai/ai-transformation/executive-ai-coaching-how-artificial-intelligence-is-shaping-leadership-development/).

FAQs

What is Ethical AI?

Ethical AI refers to the development and deployment of artificial intelligence systems in a manner that aligns with moral values, fairness, transparency, and respect for human rights. It aims to ensure AI technologies benefit society without causing harm or bias.

Why is Ethical AI important?

Ethical AI is important because AI systems can significantly impact individuals and society. Ensuring ethical standards helps prevent discrimination, protects privacy, promotes accountability, and fosters trust in AI technologies.

What are common ethical concerns in AI?

Common ethical concerns include bias and discrimination, lack of transparency, privacy violations, accountability for AI decisions, and the potential for AI to be used in harmful ways such as surveillance or autonomous weapons.

How can bias in AI be addressed?

Bias in AI can be addressed by using diverse and representative training data, implementing fairness-aware algorithms, regularly auditing AI systems for discriminatory outcomes, and involving multidisciplinary teams in AI development.

What role does transparency play in Ethical AI?

Transparency involves making AI systems understandable and explainable to users and stakeholders. It helps ensure accountability, allows for informed consent, and enables detection and correction of errors or biases.

Are there guidelines or frameworks for Ethical AI?

Yes, several organizations and governments have developed guidelines and frameworks for Ethical AI, such as the IEEE’s Ethically Aligned Design, the EU’s Ethics Guidelines for Trustworthy AI, and principles from organizations like the Partnership on AI.

Who is responsible for ensuring AI ethics?

Responsibility for AI ethics lies with AI developers, companies, policymakers, and users. Collaboration among these groups is essential to create, enforce, and follow ethical standards in AI development and deployment.

Can Ethical AI prevent misuse of AI technologies?

While Ethical AI principles aim to reduce misuse, they cannot completely prevent it. Continuous monitoring, regulation, and ethical education are necessary to mitigate risks associated with AI misuse.

How does Ethical AI impact AI innovation?

Ethical AI encourages responsible innovation by promoting trust and acceptance of AI technologies. While it may introduce additional considerations and constraints, it ultimately supports sustainable and socially beneficial AI advancements.

What is the future outlook for Ethical AI?

The future of Ethical AI involves ongoing development of standards, increased regulatory oversight, greater public awareness, and integration of ethical considerations into all stages of AI research and deployment to ensure AI benefits all of humanity.
