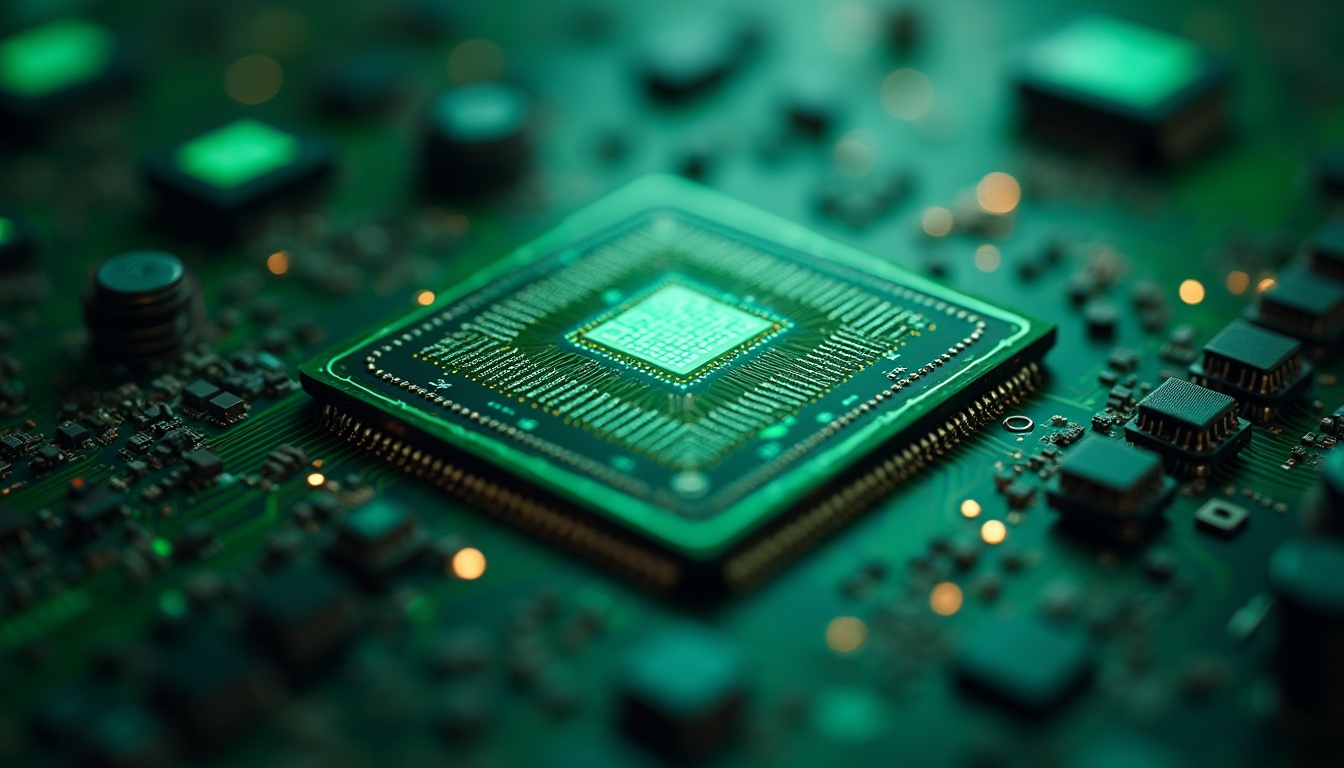

For leaders in technology, talent, and product development, it is critical to grasp the monumental scale of the current AI infrastructure race, which is redefining the very meaning of strategic investment and competitive advantage. OpenAI’s Sam Altman has articulated an ambition that underscores this new reality: securing 250 gigawatts of power by 2033. That figure equals one-third of the peak power consumption of the entire U.S., would require the output of 250 nuclear power plants, and carries a hypothetical cost of $12.5 trillion. This isn’t an abstract goal; it’s a direct response to a “compute competition” against well-resourced rivals like Google and Meta, a race in which Altman insists, “We must maintain our lead.”

The urgency is already palpable. OpenAI is projected to scale its computing capacity from 230 megawatts at the start of 2024 to 2.4 gigawatts by the end of 2025, a roughly tenfold increase that has surprised even its partners at Nvidia. The trend extends across the industry: top competitors now request single data center campuses in the 8 to 10 gigawatt range, a scale once considered science fiction that dwarfs the 5 GW total capacity of a major cloud provider like Microsoft Azure in 2023.

The rationale behind these multi-hundred-billion-dollar investments is a foundational belief that larger GPU clusters translate directly into more powerful AI models. Fueled by rapid advances across Nvidia’s chip generations, such as Blackwell and Rubin, this scaling law is no longer just an engineering challenge but a core business strategy. It forces every leader to consider how their talent acquisition, product roadmaps, and infrastructure planning will compete in an era where market leadership may be directly proportional to megawatt capacity.
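The headline numbers above hang together arithmetically, and a few lines of Python make the implied ratios explicit. Every input is taken from the figures quoted in the text except one labeled assumption: that a large nuclear plant produces roughly 1 GW. This is a back-of-the-envelope check, not a cost model.

```python
# Back-of-the-envelope checks on the headline figures.
# Inputs come from the text, except PLANT_GW, which is an assumption.

TARGET_GW = 250                 # Altman's 2033 power target
TARGET_COST_USD = 12.5e12       # hypothetical cost quoted for that target
PLANT_GW = 1.0                  # assumption: ~1 GW per large nuclear plant

CAPACITY_START_MW = 230         # OpenAI compute capacity at the start of the period
CAPACITY_END_MW = 2_400         # projected capacity at the end of 2025

CAMPUS_GW_RANGE = (8, 10)       # requested single-campus size
AZURE_2023_GW = 5               # Azure's total capacity in 2023


def cost_per_gigawatt(total_cost_usd: float, gigawatts: float) -> float:
    """Implied capital cost per gigawatt of capacity."""
    return total_cost_usd / gigawatts


def growth_multiple(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


if __name__ == "__main__":
    print(f"Plants needed: {TARGET_GW / PLANT_GW:.0f}")
    print(f"Cost per GW: ${cost_per_gigawatt(TARGET_COST_USD, TARGET_GW) / 1e9:.0f}B")
    print(f"Capacity growth: {growth_multiple(CAPACITY_START_MW, CAPACITY_END_MW):.1f}x")
    low, high = CAMPUS_GW_RANGE
    print(f"One campus vs. all of Azure (2023): "
          f"{low / AZURE_2023_GW:.1f}x to {high / AZURE_2023_GW:.1f}x")
```

The quoted figures imply about $50 billion per gigawatt and a growth multiple of about 10.4x (the "tenfold" increase). They also put a single 8 to 10 GW campus at 1.6 to 2.0 times Azure's entire 2023 footprint.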

As AI spreads across sectors, robust infrastructure has become a prerequisite rather than an afterthought. Organizations increasingly recognize that a solid foundation is essential for realizing the full potential of AI technologies. That infrastructure encompasses not just the physical hardware but also the software frameworks, data management systems, and networking capabilities that support AI applications.

Without a well-structured infrastructure, organizations may struggle to implement AI effectively, leading to missed opportunities and inefficiencies. The growing reliance on AI has prompted businesses to invest heavily in their infrastructure, looking not only to enhance their operational efficiency but also to gain a competitive edge in their respective markets.

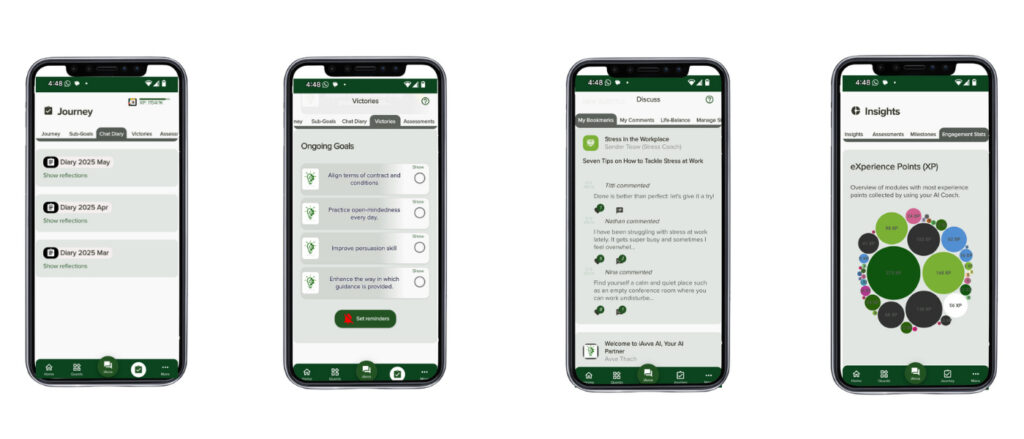

As AI becomes more integrated into business processes, the need for a scalable and flexible infrastructure that can adapt to evolving technologies and demands is paramount. This shift is not merely a trend; it represents a fundamental change in how organizations approach technology and innovation.

Key Takeaways

- AI infrastructure is becoming increasingly important as organizations rely on AI for various applications.

- Hardware plays a crucial role in AI development, with specialized hardware and accelerators becoming more prevalent.

- AI software and frameworks are constantly evolving to meet the demands of complex AI models and applications.

- AI infrastructure significantly impacts model training and deployment, requiring robust and scalable systems.

- Cloud computing is crucial for AI infrastructure, providing the necessary resources and scalability to support AI applications.

- The true strategic moat in the AI race is not hardware or capital, but the specialized human talent required to design and manage this new technological and organizational infrastructure.

The Role of Hardware in AI Development

At the heart of any AI infrastructure lies the hardware that powers it, and the performance of AI models depends heavily on that hardware's capabilities. General-purpose CPUs are poorly suited to the massively parallel matrix arithmetic that dominates AI workloads.

As a result, organizations are turning to specialized hardware designed specifically for AI workloads. Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) are prime examples of such hardware, offering significant advantages in terms of speed and efficiency. The choice of hardware can significantly impact the development and deployment of AI models.

High-performance computing resources enable faster training times, allowing organizations to iterate on their models more quickly. This agility is crucial in a landscape where AI technologies are rapidly evolving. Furthermore, investing in the right hardware can lead to long-term cost savings, as organizations can process larger datasets and run more complex models without incurring prohibitive expenses.
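To see why hardware choices translate into training time, here is a rough estimator under stated assumptions. It uses the widely cited heuristic that training a dense transformer costs about 6 × parameters × tokens floating-point operations. Wall-clock time is that total divided by the cluster's effective throughput. The model size, token count, per-GPU peak throughput, and utilization below are hypothetical placeholders, not figures from this article.

```python
# Rough wall-clock estimate for a training run, using the common heuristic
# that a dense transformer needs ~6 * N * D FLOPs to train
# (N = parameters, D = training tokens). A heuristic, not an exact law.

SECONDS_PER_DAY = 86_400


def training_flops(params: float, tokens: float) -> float:
    """Approximate total training compute in FLOPs (6 * N * D heuristic)."""
    return 6.0 * params * tokens


def training_days(params: float, tokens: float, num_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Days to train, given cluster size and the fraction of peak actually achieved."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    effective_flops = num_gpus * peak_flops_per_gpu * utilization
    return training_flops(params, tokens) / effective_flops / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical inputs: 70B parameters, 2T tokens,
    # 1e15 FLOP/s peak per accelerator, 40% utilization.
    for gpus in (1_024, 4_096, 16_384):
        days = training_days(70e9, 2e12, gpus, 1e15, 0.4)
        print(f"{gpus:>6} GPUs -> {days:6.1f} days")
```

Because this sketch holds utilization constant, quadrupling the cluster cuts the estimate by exactly 4x. In practice, scaling is usually less than linear as communication overhead grows.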

The Evolution of AI Software and Frameworks

As hardware has advanced, so too has the software that drives AI development. The evolution of AI software frameworks has played a pivotal role in democratizing access to AI technologies. Open-source frameworks such as TensorFlow, PyTorch, and Keras have made it easier for developers to build and deploy AI models without writing low-level numerical and GPU code from scratch.

These frameworks provide pre-built functions and libraries that streamline the development process, allowing organizations to focus on solving business problems rather than getting bogged down in technical details. Moreover, the evolution of software frameworks has led to increased collaboration within the AI community. Developers can share their findings, contribute to open-source projects, and learn from one another’s experiences.

This collaborative spirit fosters innovation and accelerates the pace of advancements in AI technology. As organizations adopt these frameworks, they can leverage the collective knowledge of the community, leading to more effective and efficient AI solutions.

The Impact of AI Infrastructure on Model Training and Deployment

| Infrastructure component | Impact on model training | Impact on model deployment |
|---|---|---|
| High-performance GPUs | Shortens training time | Enables faster inference and real-time deployment |
| Distributed computing | Allows parallel processing of large datasets | Supports scalable and efficient deployment |
| AutoML platforms | Automates model selection and hyperparameter tuning | Simplifies deployment with pre-built pipelines |
| High-speed storage | Reduces data loading and preprocessing time | Provides quick access to model artifacts for deployment |
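The "Distributed computing" row refers to splitting work across machines. A common form is data parallelism: each worker computes a gradient on its own shard of the batch, and the gradients are then averaged (an all-reduce on a real cluster). The pure-Python sketch below simulates this for a one-parameter linear model with squared error. No real cluster or framework is involved, and the workers run one after another. With equal-sized shards, the averaged gradient matches the full-batch gradient.

```python
# Simulated data parallelism for one-parameter linear regression (y ≈ w * x).
# Each "worker" computes the mean-squared-error gradient on its own shard;
# averaging the shard gradients (what an all-reduce does on a real cluster)
# reproduces the full-batch gradient when shards are the same size.

from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y)


def mse_gradient(w: float, batch: Sequence[Sample]) -> float:
    """d/dw of mean((w*x - y)^2) over the batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(data: Sequence[Sample], num_workers: int) -> List[List[Sample]]:
    """Split data into equal contiguous shards, one per worker."""
    if len(data) % num_workers:
        raise ValueError("dataset size must be divisible by num_workers")
    size = len(data) // num_workers
    return [list(data[i * size:(i + 1) * size]) for i in range(num_workers)]


def data_parallel_gradient(w: float, data: Sequence[Sample], num_workers: int) -> float:
    """Average of per-worker gradients, standing in for an all-reduce."""
    local_grads = [mse_gradient(w, s) for s in shard(data, num_workers)]
    return sum(local_grads) / num_workers


if __name__ == "__main__":
    data = [(float(x), 3.0 * x + 1.0) for x in range(8)]
    print(mse_gradient(0.5, data), data_parallel_gradient(0.5, data, num_workers=4))
```

Both calls print `-94.5`. With unequal shard sizes, the average would need to be weighted by shard size to stay exact.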

The quality of an organization’s AI infrastructure directly influences its ability to train and deploy models effectively. A well-designed infrastructure allows for seamless integration between hardware and software, enabling organizations to optimize their workflows. For instance, efficient data pipelines ensure that training datasets are readily available, while powerful computing resources facilitate rapid model training.
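One common way to keep training data "readily available" is prefetching: a background thread loads upcoming batches into a bounded queue while the main loop computes on the current one. The sketch below uses only Python's standard `threading` and `queue` modules. `load_batch` and `train_step` are hypothetical stand-ins that simply sleep to mimic I/O and compute.

```python
# Prefetching sketch: a background thread loads batches into a bounded queue
# so that I/O overlaps with compute instead of alternating with it.
# `load_batch` and `train_step` are hypothetical stand-ins that just sleep.

import queue
import threading
import time
from typing import Callable, Iterator, List

_SENTINEL = object()  # marks the end of the stream


def prefetch(loader: Callable[[int], List[int]], num_batches: int,
             depth: int = 2) -> Iterator[List[int]]:
    """Yield batches in order while up to `depth` future batches load in the background."""
    buf: "queue.Queue[object]" = queue.Queue(maxsize=depth)

    def producer() -> None:
        for i in range(num_batches):
            buf.put(loader(i))   # blocks while the queue is full
        buf.put(_SENTINEL)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _SENTINEL:
            return
        yield item  # type: ignore[misc]


def load_batch(i: int) -> List[int]:
    time.sleep(0.05)             # pretend to read from storage
    return [i] * 4


def train_step(batch: List[int]) -> None:
    time.sleep(0.05)             # pretend to run a forward/backward pass


if __name__ == "__main__":
    start = time.perf_counter()
    for batch in prefetch(load_batch, num_batches=10):
        train_step(batch)
    print(f"prefetched loop took {time.perf_counter() - start:.2f}s")
```

With equal load and compute times, the overlapped loop should take roughly half as long as loading and training in strict alternation. A production version would also forward exceptions raised in the producer thread to the consumer.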

This synergy is essential for organizations looking to stay ahead in a competitive landscape. Furthermore, a robust infrastructure supports continuous integration and deployment (CI/CD) practices for AI models. This means that organizations can regularly update their models with new data and insights, ensuring that they remain relevant and effective over time.

The ability to deploy models quickly and reliably is crucial for businesses that rely on real-time decision-making. In this context, investing in AI infrastructure becomes not just a technical necessity but a strategic imperative.

The Role of Cloud Computing in AI Infrastructure

Cloud computing has emerged as a game-changer for AI infrastructure, providing organizations with scalable resources on demand. By leveraging cloud services, businesses can access powerful computing capabilities without the need for significant upfront investments in hardware. This flexibility enables organizations to experiment with various AI models and approaches without being limited by their physical infrastructure.

Moreover, cloud platforms often come equipped with pre-built tools and services tailored for AI development. These offerings can significantly reduce the time it takes to set up an AI environment, enabling organizations to focus on building and refining their models rather than managing infrastructure. As cloud providers continue to enhance their offerings, organizations can expect even greater efficiencies and capabilities in their AI initiatives.

The Rise of AI-specific Hardware and Accelerators

As the demand for AI applications grows, so does the need for specialized hardware designed specifically for these tasks. Traditional computing systems are often ill-equipped to handle the unique requirements of AI workloads, leading to inefficiencies and bottlenecks. In response, manufacturers are developing AI-specific hardware and accelerators that optimize performance for machine learning tasks.

These specialized components can significantly enhance the speed and efficiency of AI model training and inference. For example, Nvidia has optimized its data-center GPUs for deep learning, adding dedicated Tensor Cores for the matrix operations at the core of neural networks; for these workloads they far outperform traditional CPUs. As organizations increasingly adopt these advanced hardware solutions, they can unlock new levels of performance and scalability in their AI initiatives.

The Importance of Data Management in AI Infrastructure

Data is often referred to as the lifeblood of AI systems, making effective data management a critical component of any AI infrastructure. Organizations must ensure that they have robust systems in place for collecting, storing, and processing data. Poor data management can lead to inaccuracies in model training and ultimately undermine the effectiveness of AI applications.

Moreover, as data privacy regulations become more stringent, organizations must prioritize compliance in their data management practices. This includes implementing measures to protect sensitive information while still enabling access to relevant data for model training. A well-structured data management strategy not only enhances the quality of AI models but also builds trust with stakeholders by demonstrating a commitment to ethical data practices.
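One technique for protecting sensitive information while keeping data usable for training is keyed pseudonymization. Each direct identifier is replaced with an HMAC-SHA256 digest. The same input always maps to the same token, so records can still be joined, but the raw value is not exposed. The sketch uses Python's standard `hmac` and `hashlib` modules. The field names and the inline key are hypothetical; in practice the key would live in a secrets manager. Pseudonymized data is not the same as anonymized data.

```python
# Keyed pseudonymization: replace direct identifiers with an HMAC-SHA256 digest.
# Identical inputs map to identical tokens (joins still work); without the
# secret key, tokens cannot feasibly be reversed or recomputed from guesses.
# SENSITIVE_FIELDS and the example key are hypothetical.

import hashlib
import hmac
from typing import Dict, Iterable

SENSITIVE_FIELDS = {"email", "phone"}  # hypothetical schema


def pseudonymize_value(value: str, key: bytes) -> str:
    """Deterministic 64-character hex token for `value` under `key`."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: Dict[str, str], key: bytes,
                        fields: Iterable[str] = SENSITIVE_FIELDS) -> Dict[str, str]:
    """Return a copy of `record` with the sensitive fields tokenized."""
    out = dict(record)
    for field in fields:
        if field in out:
            out[field] = pseudonymize_value(out[field], key)
    return out


if __name__ == "__main__":
    key = b"example-key-do-not-use"
    row = {"email": "ada@example.com", "phone": "555-0100", "purchase_total": "42.50"}
    print(pseudonymize_record(row, key))
```

Non-sensitive fields such as `purchase_total` pass through unchanged. The original record is never mutated.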

The Role of Networking and Connectivity in AI Infrastructure

In an increasingly interconnected world, networking and connectivity play a vital role in supporting AI infrastructure. High-speed networks enable organizations to transfer large datasets quickly and efficiently, which is essential for training complex models. Additionally, as more devices become connected through the Internet of Things (IoT), the ability to process data at scale becomes increasingly important.

Organizations must invest in reliable networking solutions that can handle the demands of AI workloads. This includes ensuring low-latency connections between data sources, computing resources, and end-users. By prioritizing networking capabilities within their AI infrastructure, organizations can enhance their ability to leverage real-time data for decision-making and improve overall operational efficiency.
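A quick calculation shows why link speed matters for moving training data. Transfer time is the dataset size in bits divided by the usable bandwidth. The `efficiency` factor is an assumption standing in for protocol overhead and contention, and the 100 TB dataset is a hypothetical example.

```python
# Time to move a dataset over a network link:
#   hours = (terabytes * 1e12 * 8 bits) / (Gb/s * 1e9 * efficiency) / 3600
# `efficiency` is an assumed fraction of line rate actually achieved.

def transfer_hours(dataset_terabytes: float, link_gbps: float,
                   efficiency: float = 0.8) -> float:
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = dataset_terabytes * 1e12 * 8          # decimal terabytes -> bits
    bits_per_second = link_gbps * 1e9 * efficiency
    return bits / bits_per_second / 3600


if __name__ == "__main__":
    for gbps in (10, 100, 400):
        print(f"100 TB over {gbps:>3} Gb/s: {transfer_hours(100, gbps):6.1f} h")
```

At 80% efficiency, 100 TB takes about 27.8 hours over a 10 Gb/s link but under 3 hours at 100 Gb/s.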

The Growing Importance of Edge Computing in AI Infrastructure

Edge computing is emerging as a critical component of modern AI infrastructure as organizations seek to process data closer to its source. By deploying computing resources at the edge—such as on IoT devices or local servers—organizations can reduce latency and improve response times for real-time applications. This is particularly important for industries like manufacturing or healthcare, where timely insights can have significant implications.

The rise of edge computing also addresses concerns related to bandwidth limitations and data privacy. By processing data locally rather than sending it to centralized cloud servers, organizations can minimize the amount of sensitive information transmitted over networks. This approach not only enhances security but also allows for more efficient use of network resources.
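Processing locally often means the device uploads a compact summary instead of every raw reading. It also forwards any values that need immediate attention. The sketch below reduces a window of sensor readings to such a payload. The threshold, the payload fields, and the sample readings are hypothetical.

```python
# Edge-side summarization: instead of streaming every raw reading to the cloud,
# the device sends a compact summary plus any readings above a threshold.
# The threshold and payload shape are hypothetical.

from statistics import mean
from typing import Dict, List


def summarize_window(readings: List[float], alert_threshold: float) -> Dict[str, object]:
    """Reduce a window of raw readings to the payload actually uploaded."""
    if not readings:
        raise ValueError("empty window")
    return {
        "count": len(readings),
        "mean": mean(readings),
        "min": min(readings),
        "max": max(readings),
        "alerts": [r for r in readings if r > alert_threshold],
    }


if __name__ == "__main__":
    window = [70.1, 70.4, 69.8, 71.0, 95.2, 70.3]  # e.g. temperatures with one spike
    print(summarize_window(window, alert_threshold=90.0))
```

However many readings fall in a window, the upload stays a handful of numbers plus the alerts. That is where the bandwidth and privacy savings come from.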

The Role of Security and Privacy in AI Infrastructure

As organizations increasingly rely on AI technologies, security and privacy concerns become paramount. The integration of AI into business processes often involves handling sensitive data, making it essential for organizations to implement robust security measures within their infrastructure. This includes safeguarding against cyber threats while ensuring compliance with data protection regulations.

Organizations must adopt a proactive approach to security by incorporating best practices into their AI infrastructure from the outset. This includes implementing encryption protocols, access controls, and regular security audits to identify vulnerabilities. By prioritizing security within their AI initiatives, organizations can build trust with customers and stakeholders while mitigating risks associated with data breaches.
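Access controls are easiest to audit when they deny by default: a request succeeds only if one of the caller's roles explicitly grants that action on that resource. The sketch below shows this pattern as simple role-based access control. The roles, actions, and resource names are hypothetical examples, not a recommended policy.

```python
# Deny-by-default role-based access control for AI assets.
# Roles, actions, and resource names are hypothetical.

from typing import Dict, FrozenSet, Iterable, Tuple

Permission = Tuple[str, str]  # (action, resource)

ROLE_PERMISSIONS: Dict[str, FrozenSet[Permission]] = {
    "data_scientist": frozenset({("read", "training_data"), ("write", "experiments")}),
    "ml_engineer": frozenset({("read", "model_registry"), ("deploy", "model_registry")}),
    "auditor": frozenset({("read", "access_logs")}),
}


def is_allowed(roles: Iterable[str], action: str, resource: str) -> bool:
    """Grant access only if some role explicitly lists (action, resource)."""
    return any((action, resource) in ROLE_PERMISSIONS.get(role, frozenset())
               for role in roles)


if __name__ == "__main__":
    print(is_allowed({"data_scientist"}, "read", "training_data"))
    print(is_allowed({"data_scientist"}, "deploy", "model_registry"))
```

Unknown roles and unlisted actions fall through to `False`. As a result, a missing policy entry fails safe rather than open.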

The Future of AI Infrastructure and its Impact on Society

Looking ahead, the future of AI infrastructure holds immense potential for transforming society as a whole. As organizations continue to invest in advanced technologies, we can expect significant advancements in areas such as healthcare, transportation, education, and more. The ability to harness the power of AI will enable businesses to solve complex problems more effectively while driving innovation across various sectors.

However, this transformation also comes with challenges that must be addressed responsibly. As AI technologies become more pervasive, ethical considerations surrounding bias, transparency, and accountability will take center stage. Organizations must prioritize ethical practices within their AI initiatives to ensure that these technologies benefit society as a whole rather than exacerbate existing inequalities.

For leaders in technology, talent, and product development, grasping the scale of this race is critical, because it is redefining the very meaning of strategy and competition. The race is not just about algorithms but also about the immense power, measured in gigawatts, that fuels these systems. A related article, Unlocking AI’s Disproportionate Returns: Shifting Focus to DPI, delves into how businesses can leverage AI to achieve significant returns by focusing on data processing infrastructure. Understanding these dynamics is essential for staying ahead in the rapidly evolving landscape of AI technology.

FAQs

What is the AI infrastructure race?

The AI infrastructure race refers to the global competition among companies and countries to develop and deploy advanced artificial intelligence technologies. This includes building the necessary hardware, software, and data infrastructure to support AI applications and services.

Why is the AI infrastructure race important?

The AI infrastructure race is important because it will determine which entities have the capability to harness the power of AI for various applications, such as autonomous vehicles, healthcare diagnostics, and financial analysis. It also has significant implications for economic competitiveness and national security.

What are gigawatts in the context of AI infrastructure?

In the context of AI infrastructure, gigawatts measure electrical power, not computing power. They describe how much electricity a data center campus can draw to run its servers, accelerators, networking, and cooling. Because each accelerator needs a roughly predictable amount of power, a site's power capacity has become a common shorthand for how much AI compute it can host.
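To connect a power figure to hardware, divide the facility's power by its PUE (power usage effectiveness, the ratio of total facility power to IT equipment power) to get the power available for IT equipment. Then divide that by the power each accelerator needs. The sketch below does this. The 1 GW campus, the PUE of 1.25, and the roughly 2 kW "all-in" draw per accelerator (its share of CPUs, memory, networking, and storage) are hypothetical inputs.

```python
# Translating a power budget into an approximate accelerator count.
# PUE (power usage effectiveness) = total facility power / IT equipment power.
# The per-accelerator wattage is an assumption that includes its share of
# supporting hardware, not just the chip itself.

def accelerators_supported(facility_gw: float, pue: float,
                           watts_per_accelerator_all_in: float) -> int:
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_watts = facility_gw * 1e9 / pue           # power left for IT equipment
    return int(it_watts // watts_per_accelerator_all_in)


if __name__ == "__main__":
    # Hypothetical inputs: 1 GW campus, PUE 1.25, ~2 kW per accelerator all-in.
    print(accelerators_supported(1.0, 1.25, 2_000))
```

With these inputs, the function returns 400,000 accelerators. Changing either the PUE or the per-accelerator figure shifts the answer proportionally.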

How does the AI infrastructure race impact businesses and industries?

The AI infrastructure race impacts businesses and industries by creating opportunities for innovation and disruption. Companies that can leverage advanced AI infrastructure will have a competitive advantage in areas such as product development, customer experience, and operational efficiency.

What are the challenges of participating in the AI infrastructure race?

Participating in the AI infrastructure race presents challenges such as the high cost of building and maintaining advanced AI infrastructure, the need for specialized talent to develop and operate AI systems, and the ethical considerations surrounding the use of AI technologies. Additionally, there are concerns about data privacy and security in the context of AI infrastructure.
